Botnet communication over Twitter, Reddit, social web

A botnet, a network of software robots, is typically associated with trojan- or worm-infected computers used to perform the bidding of their master: spam, malware, DDoS. And while the common application is grossly unethical and damaging, the academic study of a cluster of software nodes working as a group is fascinating.

Given the popularity of the IRC protocol for communication between the infected computers, I thought it might be an interesting thought experiment to consider other means of communication. On a locked-down corporate network that blocks all but a few essential ports, HTTP is typically let through; I guess people need to access web pages for research and other business needs. Without setting up any control servers of our own, let's see what existing web services one could use to spawn a web of online mischief!

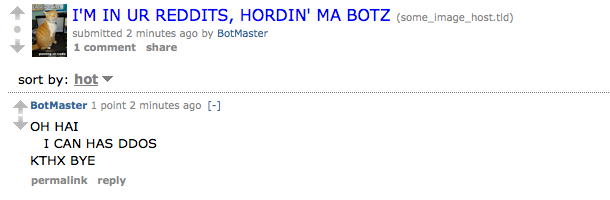

Botnet over Twitter

Twitter might prove to be an ideal service, as it is already meant for post-on-web social communication not just between humans, but bots also.

Twitter’s API makes it very easy for software to access and post all the vital information.

The service itself comes with the concept of following specific accounts built in, allowing one to set up the network layout entirely within the web service itself.

Replies, direct messages, and private profiles are just gravy.

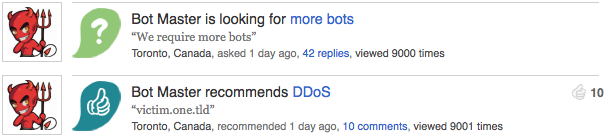

Botnet over Reddit

A clever use of cats and captions might let Reddit submissions live long enough to be read by the bot army.

Just like every social-network website, Reddit comes with a set of “friends”, and even private custom reddits that can be used as “channels” to communicate in.

Simple and clean HTML markup makes it easy to parse the contents of the page.

The zealous community, though, is quick to point out any suspiciously spammy activity, and Proggit members will likely hijack any control in place.

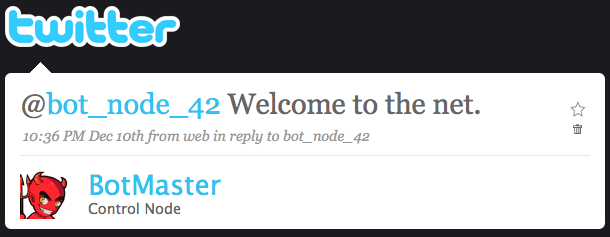

Botnet over GigPark, et al.

GigPark could be used to request and find recommendations for handymen, doctors, and denial-of-service attacks.

The above ideas could be generalized to any Social Network website. GigPark’s feed of trusted recommendations is already filtered to 2 degrees of separation between the linked nodes, making filtering and discovery of new bots much easier. Friend-lists, messages, and favourite “recommendations” (which could function as a queue of tasks) rival Twitter’s toolset.

Actually, GigPark’s innovative Suggested Friends feature will sync the “friends” from other social networks such as Twitter or Facebook, allowing for redundancy across multiple networks.

Next Steps

One downside to this (obviously hypothetical) method is that it relies too heavily on the host network. The web service might be in an advantageous position to identify all the nodes, perhaps more so than an IRCop discovering the IRC channel where bots have gathered to communicate.

Another is the issue of information persistence. Web applications will typically keep the entire history of commands online. While the privacy options that some social networks supply might hide some (or even all) of the activity from the public, some extra work needs to be done to hide the information from the host itself. Obfuscation, encoding, and the liberal use of “delete” options will scatter the data through the access logs, making the activity reasonably more difficult to trace than it would be from a simple snapshot of the database. Some bots don’t enjoy being studied by security researchers, so they might be more exposed here.

Finally, going back to the issue of security and the corporate firewall: there will likely be a proxy server filtering access to certain websites. Some might be blocked because they distract employees (Facebook, MySpace, etc.); others might be blocked by a match on their content. Still, with a simple goal of communication, one just needs to find an online service that is trusted enough to be widely accessible, and some means of getting it to display your supplied information.

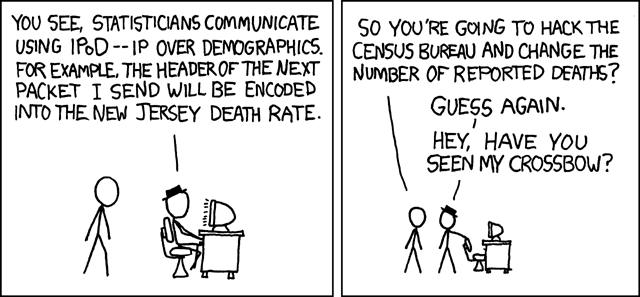

This doesn’t even have to be accomplished by online POST requests, but now we are getting into the XKCD territory of Cuils.

This is asking for a proof of concept, Tony.

Why not just use XML-RPC, and stick some sneaky code into a publicly accessible web server? That seems much less contrived…

Of course, the biggest issue I can see with this is that HTTP communication requires polling, which slightly increases the complexity of any bot program. You need to make sure the bot is inconspicuous enough; otherwise a sysadmin might see the logs and wonder why someone keeps connecting to Twitter every 5 minutes on the :00.
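From the sysadmin's side of that observation, clockwork-regular requests are easy to flag. The following is a minimal sketch, assuming the proxy log has already been reduced to a list of request timestamps for one client and one destination; the function name `looks_like_polling` and both thresholds are hypothetical choices for illustration, not taken from any particular tool.

```python
from datetime import datetime, timedelta
from statistics import mean, pstdev


def looks_like_polling(timestamps, min_events=6, max_jitter_ratio=0.1):
    """Return True when the gaps between requests are suspiciously regular.

    timestamps: datetime objects for one client/destination pair.
    min_events and max_jitter_ratio are illustrative thresholds (assumptions).
    """
    if len(timestamps) < min_events:
        return False  # too few requests to judge
    ordered = sorted(timestamps)
    # Seconds elapsed between each pair of consecutive requests.
    gaps = [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]
    average_gap = mean(gaps)
    if average_gap == 0:
        return False  # all requests share one timestamp; nothing to measure
    # Coefficient of variation: small values mean near-constant intervals.
    return pstdev(gaps) / average_gap <= max_jitter_ratio


# Usage: a client hitting the same site every 5 minutes on the :00.
start = datetime(2009, 1, 1, 9, 0)
every_five_minutes = [start + timedelta(minutes=5 * i) for i in range(12)]
print(looks_like_polling(every_five_minutes))
```

A check this simple only catches fixed-interval polling; traffic with irregular gaps, such as ordinary human browsing, falls outside the threshold.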

This is certainly a very interesting idea. Of course it all comes down to how crafty the communications are – and if access to those sites is actually allowed or not. Alternatively, the botnet owner could also set up their own ’social networking’ portal.

Way ahead of you, lol. I remember reading a book about encryption combined with malware, and the possible ways to use it. It was all thought-experiment stuff, mostly as security research. One of the key concepts was that it is entirely possible to purchase a specific URL that is cryptographically derived from the date, and to host a publicly accessible BBS at that URL. The bots and masters can post encrypted communications there, and the book showed that unless there was a mistake in the encryption algorithms, it would be nigh impossible to round up the author. It even explored methods of viruses ransoming off encrypted data. Luckily, the virus writers and bot writers so far are pretty dumb, relatively speaking. Most ransomware is badly coded, and uses a predetermined public key instead of a hashed public key. Interesting ideas though!

I wouldn’t be surprised if some communications are already being facilitated by Twitter. That said, there are much more efficient and effective mechanisms for managing and spreading bots.

Let’s be clear on one thing. Some of the recent ransomware does have flaws. Most of the original source malware and bots are professionally done, with CVS archives and source management. Your statement is true for all the skiddies that steal the code and try to use it.

Malware and bots are strictly a numbers game. Don’t let anyone ever tell you differently: it’s always about the money.

I think I found a twitter botnet… http://twitter.com/masterconsole

Tweets by this user are frequent, and include messages like:

#mcp: $cmd=ban $drone=1620 $reason: host is a virtual machine (honey pot?) $code: 4122 $job=786620430-12038

#mcp: $cmd=install $scope=gateway $payload=252 2629898148293275722137311933159247363679864621 5724588

#mcp: $job=71224324-20007 completed in 2 cpu days.

What's the matter, @SarkProgram? You look nervous.

(a reference to the movie Tron)

Michael hit the nail on the head. Modern botnets use two things for command and control: P2P protocols (primarily custom stuff built on top of Overnet) and HTTP traffic stored on compromised servers.

Some relevant material is available at Brandon Enright’s site: http://noh.ucsd.edu/~bmenrigh/ (scroll down to the “Exposing Stormworm” link; I hate linking directly to things like PowerPoint files), along with an analysis of the domain name generation algorithm for the Srizbi botnet.

-Leigh
